In the frenetic, almost frantic world of modern software development, it’s easy to run right past good, solid practices. Newer is better, almost by definition. Often the worst place to be, at least career-wise, is defender of the past. A.I. is a good example. Symbolic systems are seen as stone-age, and the headlong rush into generative systems and Large Language Models is a siren song. However, a moment’s pause may be in order.

Modern methods may indeed be the correct course, but we didn’t get here by chance or luck. Something came before. It takes much more than labeling things to fully grok them. Consideration from first principles can re-calibrate one’s perspective. This was a touchstone of scientists like Richard Feynman and Michael Faraday. Feynman advised that being able to create something, or at least explain it in simple terms, showed real understanding well beyond just knowing names and definitions. Faraday once said to students:

> Do not refer to your toy-books, and say that you have seen that before.
>
> Answer me rather, if I ask you, have you understood it before?

The best way to learn how to bake a cake is to actually bake a cake. Perhaps even several of them. Unfortunately, a lot of modern programming languages and frameworks lean heavily on upfront doctrine and formality. We’re asked to ‘trust the experts’ and spend months or years learning from ‘toy-books’ (sometimes written and promoted by those very same experts). That’s a very big risk, with a very long-deferred payoff. Why not first spend a small fraction of that time on some first-principles thinking?

This brings me to a brief discussion of the Forth language. It’s been many years since I wrote any commercial code in Forth. But it’s been only a few minutes since I thought about a problem from first principles using Forth. What makes it unique, beyond all its quirkiness (Reverse Polish Notation (RPN), stack architecture, concatenative programming, extreme simplicity, etc.), is its syntonic nature. Forth enables one to think fully computationally while remaining fully human. That’s one heck of a neat trick. An hour of interactive thinking/exploration/coding in Forth can often produce the kernel of a solution, even to complex problems. Or even serendipitous treasures. No cutting & pasting, mysterious black boxes, or hyper-abstraction is needed. No team is needed. In fact, Isaac Asimov wrote in a 1959 essay that isolation is crucial to deep thinking. The team will still be there in an hour, ready to work and interested in anything you can contribute.
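For readers who have never touched Forth, here is a rough sketch of what RPN, a data stack, and concatenation look like in practice. It is written in Python rather than Forth, and it is only a toy: the names `run`, `define`, and `WORDS` are hypothetical helpers made up for this illustration, and the word set (`+`, `*`, `dup`) is a tiny assumed subset, not a real Forth system.

```python
# Toy RPN evaluator: a sketch of the stack idea, not a real Forth.
# `WORDS`, `run`, and `define` are hypothetical names chosen for illustration.

def _binary(op):
    """Build a word that pops two values and pushes op(a, b)."""
    def word(stack):
        b = stack.pop()
        a = stack.pop()
        stack.append(op(a, b))
    return word

def _dup(stack):
    """Duplicate the top of the stack."""
    stack.append(stack[-1])

# The dictionary maps word names to functions that act on the stack.
WORDS = {
    "+": _binary(lambda a, b: a + b),
    "*": _binary(lambda a, b: a * b),
    "dup": _dup,
}

def run(source, stack=None):
    """Evaluate whitespace-separated tokens left to right on a stack."""
    stack = [] if stack is None else stack
    for token in source.split():
        if token in WORDS:
            WORDS[token](stack)       # known word: execute it
        else:
            stack.append(int(token))  # anything else: treat as an integer literal
    return stack

def define(name, body):
    """Define a new word as the concatenation of existing words."""
    WORDS[name] = lambda stack: run(body, stack)

define("square", "dup *")
print(run("3 square 4 square +"))  # [25]
```

The point of the sketch is the last two lines: a new word is just existing words placed side by side, and a program is just words placed side by side. There is no syntax to speak of beyond that, which is a large part of why exploring a problem this way feels so direct.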

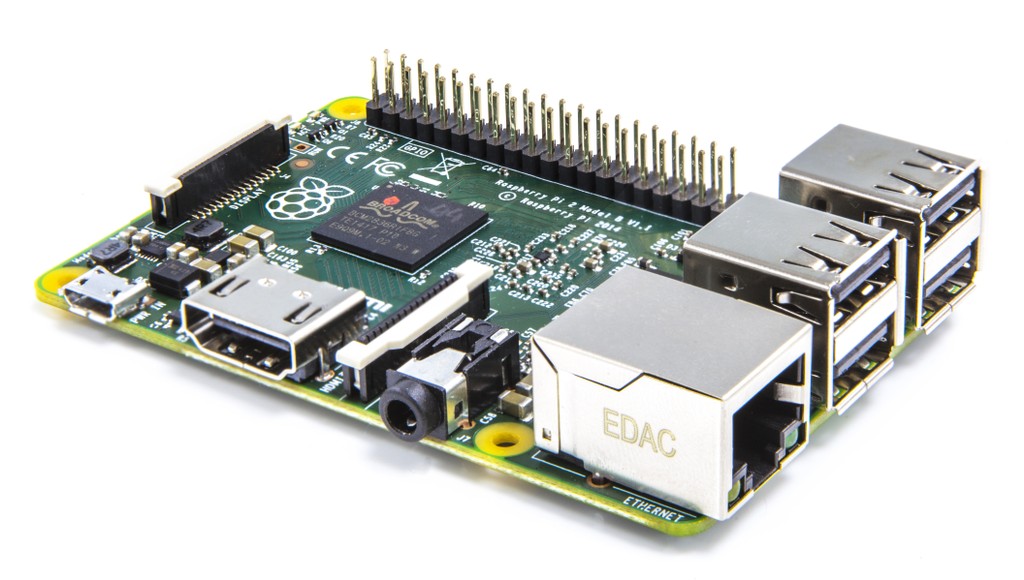

In more extended sessions, I call this mode of thinking “Forth ‘n Chips”. Working with integrated circuits, gates, and even transistors can be quite liberating. Getting away from your routine can open your mind. Some writers enjoy using pen and paper occasionally. It doesn’t mean they’re laptop-hating Luddites. Some hockey players need to leave the video room and go for a fun skate to clear their head and regain their muscle memory. It doesn’t mean they’re unthinking brutes. It means they know how to re-calibrate.

There’s a natural, almost biological feel to Forth. Concatenation is a more reptilian way of thinking than deductive reasoning. Perhaps counterintuitively, subjective thinking can lead to stronger objective thinking. It’s a way to look a bit more carefully at what you’re rushing past.

Walking through a computer history museum, or browsing old magazines and manuals, can give one perspective on the path that led to this frenetic future. Remember that the Homo sapiens who evolved on the grasslands to find food, avoid predators, and raise offspring had basically the same brain as we do today. Fashion comes and goes, but first principles remain.